Building a RAG-Powered Knowledge Engine for African Countries

End-to-End Semantic Processing with ZenML, MongoDB, and Opik

As the use of large language models (LLMs) becomes more widespread, there is growing demand for systems that can generate accurate, grounded, and culturally contextual content-especially in underrepresented domains like African knowledge. This project introduces a scalable, modular pipeline that extracts, summarizes, evaluates, and semantically indexes content on African countries using Wikipedia as the primary data source. The goal: to power retrieval-augmented question answering (RAG) with verifiable and relevant answers.

System Overview

Our architecture is built using modern, modular components:

ZenML serves as the orchestration backbone, providing structured pipelines for ETL (Extract, Transform, Load), summarization, evaluation, and dataset generation.

Wikipedia is used as the source of high-quality, crowd-curated information.

MongoDB acts as a flexible document store for raw articles, summaries, and vector embeddings.

Opik, an LLM observability platform, is integrated for real-time evaluation of summaries and Q&A outputs using metrics like BERTScore, Cosine Similarity, and hallucination detection.

RAG architecture bridges the vectorized content to end-users by combining retrieval (from indexed summaries/articles) and generation (using LLMs) to answer complex user queries.

The project structure is shown below, using uv in the project scaffolding, if you are not familiar with uv, you can read my introduction to that here

Our pipeline involves:

📄 ETL steps to fetch and structure Wikipedia content.

✂️ Summarization steps that generate multiple concise variants per country.

📊 Evaluation metrics like BERTScore and Cosine Similarity to score the summaries.

🧠 Vector indexing of articles and summaries for RAG-based semantic search.

🔍 Q&A evaluation for answer relevance and hallucination detection.

📦 Key Pipeline Steps

ETL Workflow

Using ZenML steps, the system:Crawls Wikipedia articles for each African country.

Parses the Table of Contents (TOC) and relevant sections.

Cleans and ingests the data into a document collection in MongoDB.

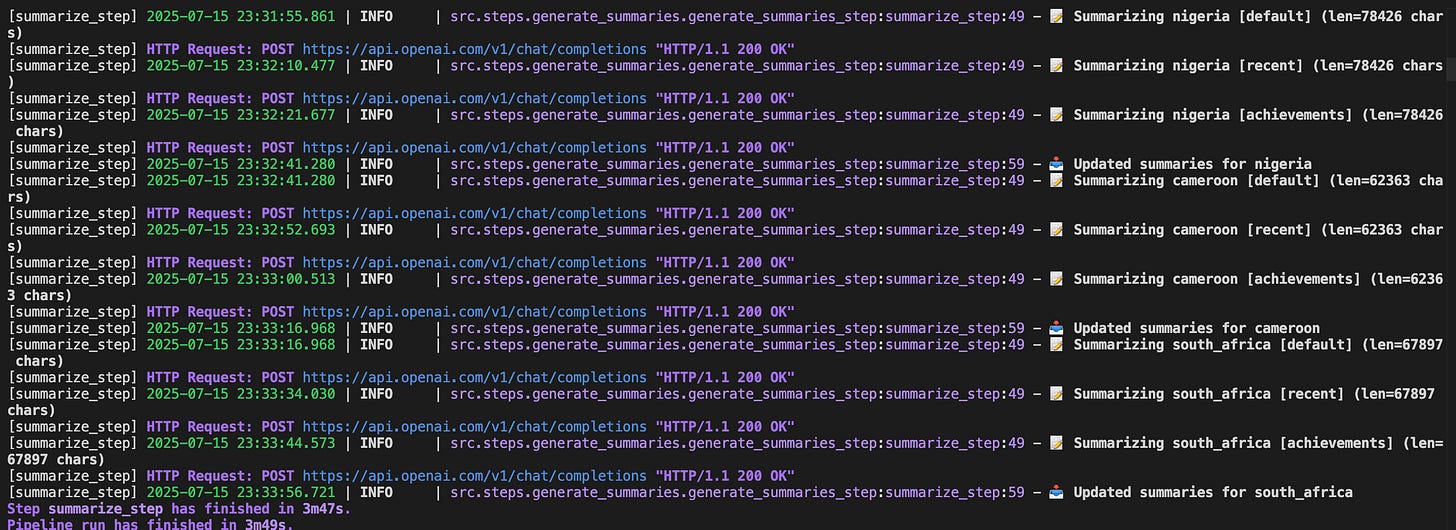

Summarization

Each country’s article is summarized into multiple variants (e.g., “default”, “recent”, “achievements”) using prompting strategies. This diversity supports multiple downstream use cases (e.g., news flashbacks, national achievements, and history overviews).Evaluation & Observability

Generated summaries are evaluated with Opik, which applies BERTScore, Cosine Similarity, and combined metrics to track performance across countries and summary types. This step ensures factual consistency and linguistic quality.Vector Indexing for RAG

Summaries and full articles are encoded into embeddings and stored in a vector collection inside MongoDB. This forms the basis for retrieval during inference time.Q&A Dataset and Evaluation

A question-answer dataset is created using ZenML and evaluated using Opik’s metrics for answer relevance and hallucination rates. This helps benchmark how well the RAG system can ground answers in trusted knowledge.

1. ETL Pipeline (ZenML Orchestrated)

The pipeline begins by extracting Wikipedia content for each African country.

Crawl Step: Uses the MediaWiki API to fetch the article structure and content of each country (e.g., Senegal, Nigeria).

Parse Step: Cleans and structures the raw HTML, removing unnecessary tags and formatting.

Ingest Step: Stores the cleaned article into MongoDB under the

document_collection.

📦 Storage Target: MongoDB

📘 Content: Full structured Wikipedia articles for each country.

2. Summarization Step

Using ZenML, three distinct summaries are generated per country article:

Summary 1→ Default (overall profile)Summary 2→ Recent (current developments or recent history)Summary 3→ Achievements (key milestones, diplomatic wins, economic progress, etc.)

These summaries are saved in MongoDB within the same document, under the summaries field.

3. Summaries Evaluation (via Opik)

Each summary is evaluated against its source document to check quality, factuality, and semantic relevance.

✅ Metrics used:

BERTScore – evaluates semantic similarity

Cosine Similarity – compares vector embeddings of the summary vs. article

🔍 Results are observable in Opik, with metrics like F1, precision, and recall. You can detect high-performing vs. weak summaries for continuous improvement.

4. Vector Store for Retrieval

All documents and summaries are encoded using a sentence embedding model and saved to the vector_collection in MongoDB.

This enables RAG systems to:

Retrieve the most relevant chunks at query time

Provide grounded answers from actual country-specific content

5. Question-Answer (Q&A) Evaluation

To assess how well the system performs in real usage scenarios:

A dataset of Q&A pairs is generated or curated using ZenML (

Q&A DATASET STEP).Evaluation includes:

Answer Relevance

Hallucination Detection

📈 All metrics and insights are tracked and visualized using Opik.

Use Cases

Educational bots for African history, geography, and politics.

Government info systems for public awareness and transparency.

Cultural knowledge preservation using multi-modal summaries.

Grounded content generation for digital encyclopedias or assistant applications.

Outcomes

The result is a robust, transparent, and continuously improvable AI system capable of understanding, summarizing, and answering questions about African nations without relying on opaque or hallucinated model behavior. This architecture emphasizes auditability, factual grounding, and domain-specific relevance, and can be extended to other regions or domains with minimal adaptation.

References

https://github.com/benitomartin/llm-observability-opik/tree/main

https://www.amazon.com/LLM-Engineers-Handbook-engineering-production/dp/1836200072

https://en.wikipedia.org/wiki/Main_Page